Launching the privacy-focused AI chat platform

Cognos encrypts your chats with different AI models so you can keep your privacy. With our security you can be confident that your messages won't be viewed, leaked, hacked or sold.

Generative AI is changing how we all create content and is being integrated everywhere. But as the companies producing these models reach the limits of what is possible with open data, they increasingly rely on the information you give them to train their future models. This is a privacy nightmare for individuals and companies who want to use AI but value their privacy and confidentiality.

Employees of companies such as OpenAI and Google can - and do - access the messages you send through products such as ChatGPT and Gemini.

On top of that, your chat histories could be accidentally leaked (this has already happened to OpenAI), stolen by hackers, sold by the provider of the software you're using, or handed to a bigger company in an acquisition (like when Google acquired Nest).

Are you going to continue risking your personal data?

If you have the technical knowledge and a few hundred or a few thousand dollars to spend on hardware, it is possible to find open source software that you can set up and run yourself, ensuring your data never leaves your own private garden. For the truly paranoid, running everything yourself is the best option.

But what about people who:

- Want to use generative AI;

- But also want to preserve as much of their privacy as possible;

- Can't, or don't want to, run and maintain AI models or software themselves;

Cognos is the privacy-focused alternative that tries to offer the convenience of cloud AI but encrypts your chats so that only you can access them.

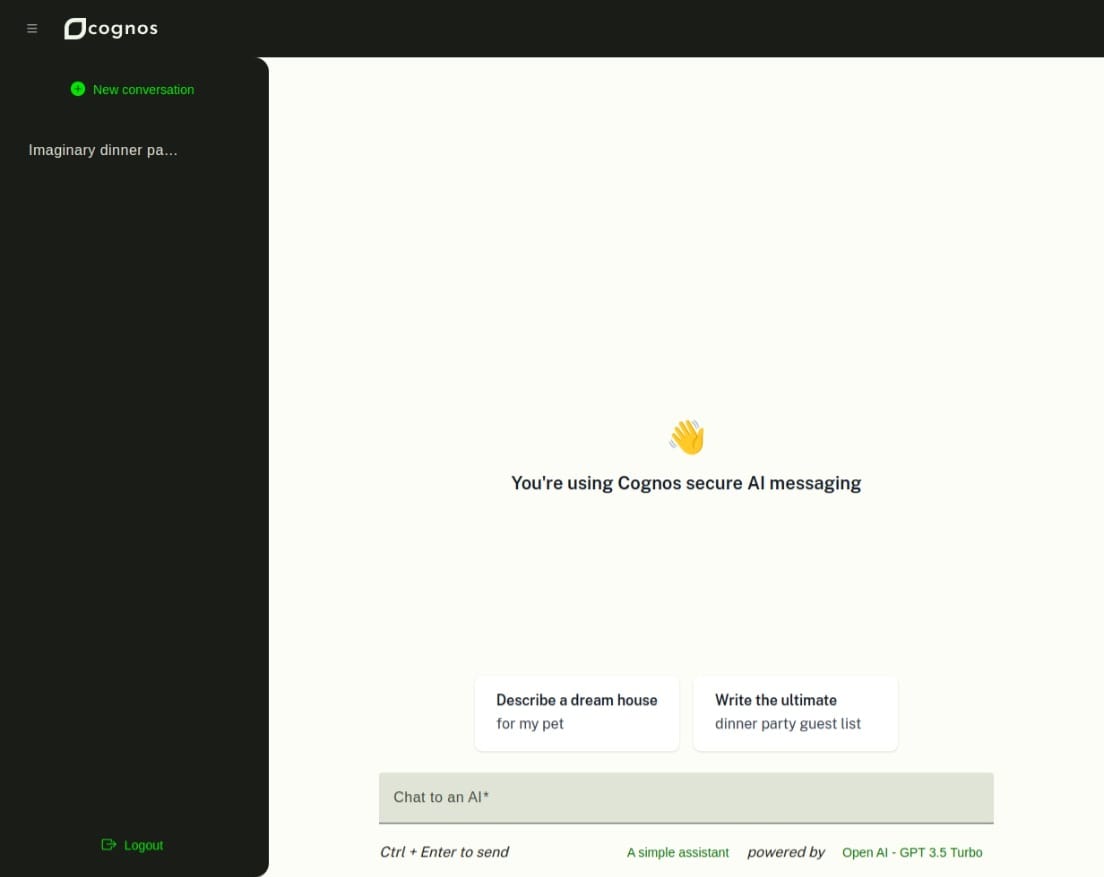

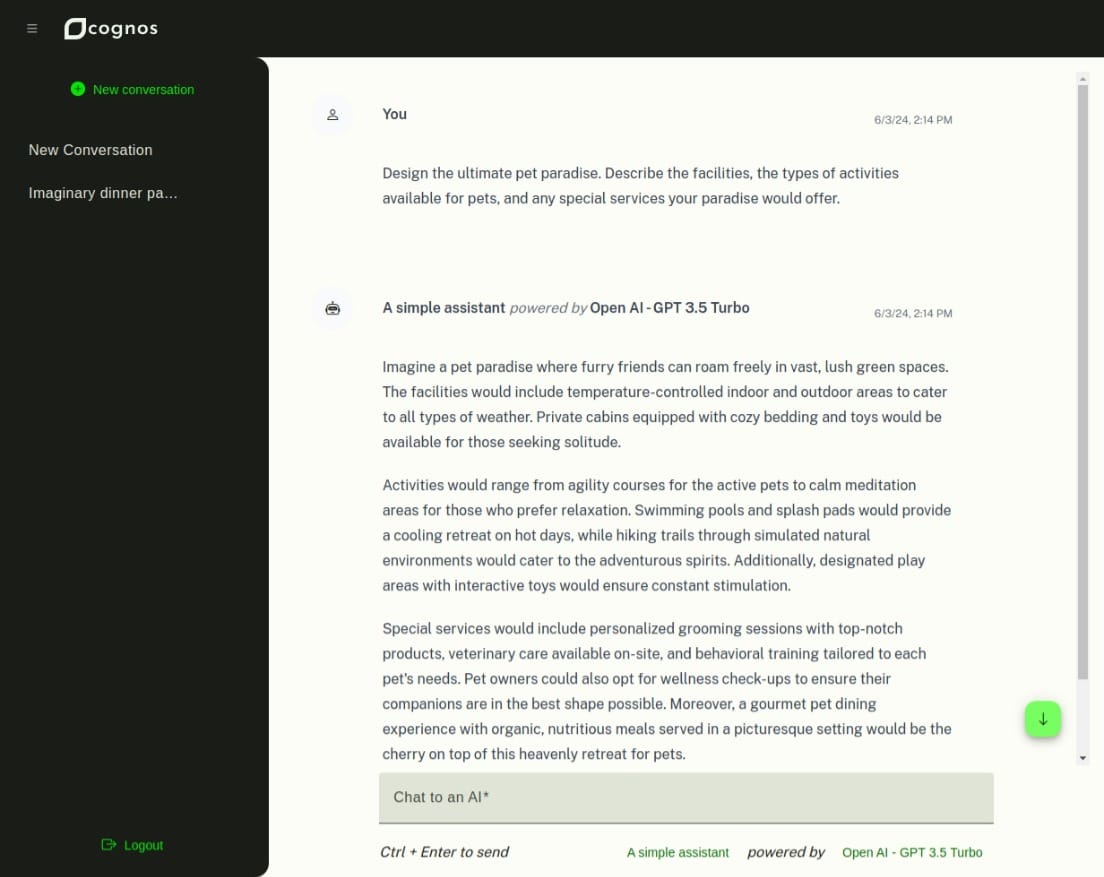

Demo of our Beta

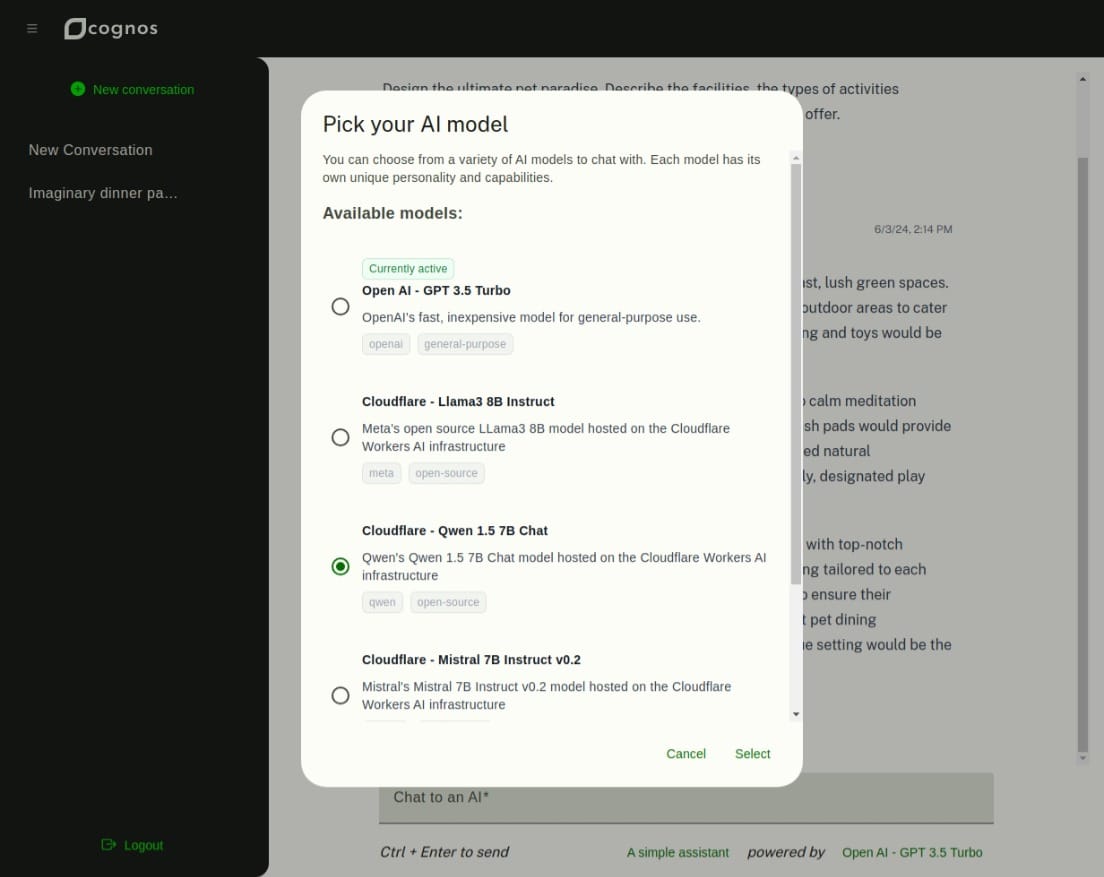

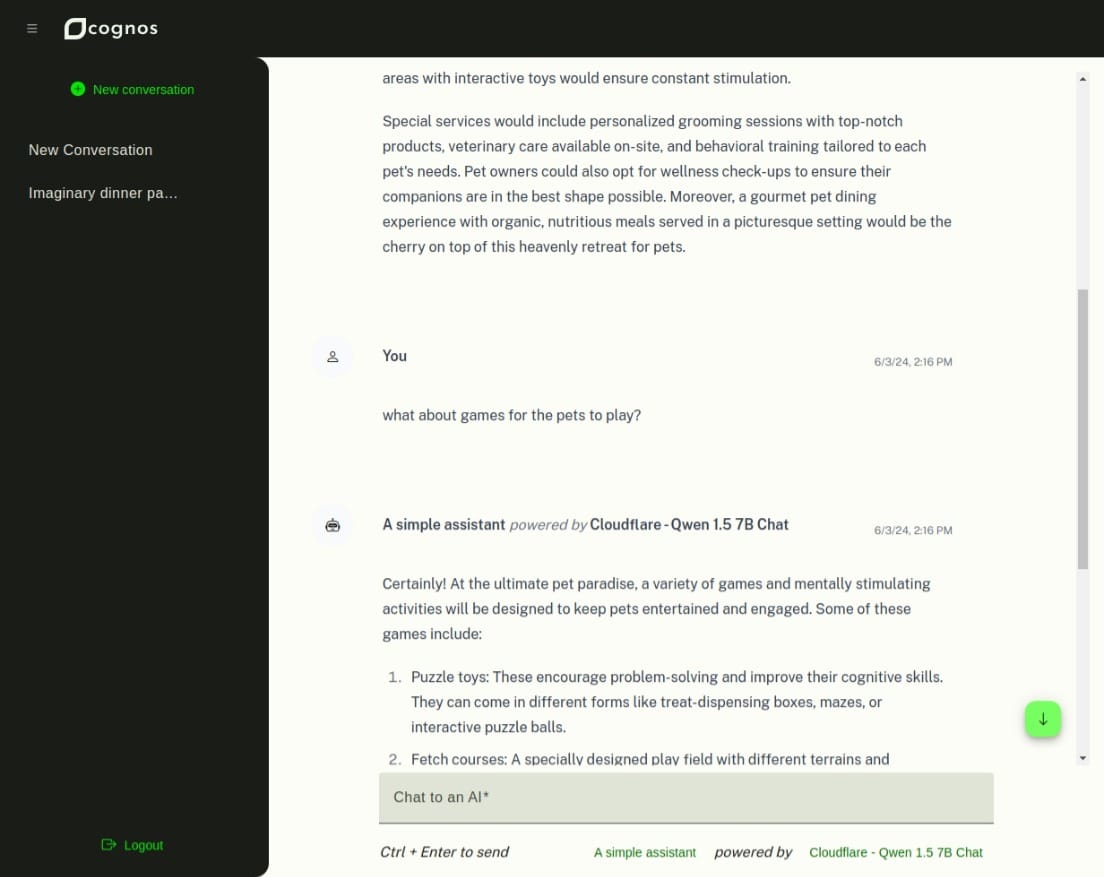

It's nice to know what is being offered without having to sign up, so here are some screenshots to show you what to expect. This is a beta project though, so there are only basic chat features at the moment.

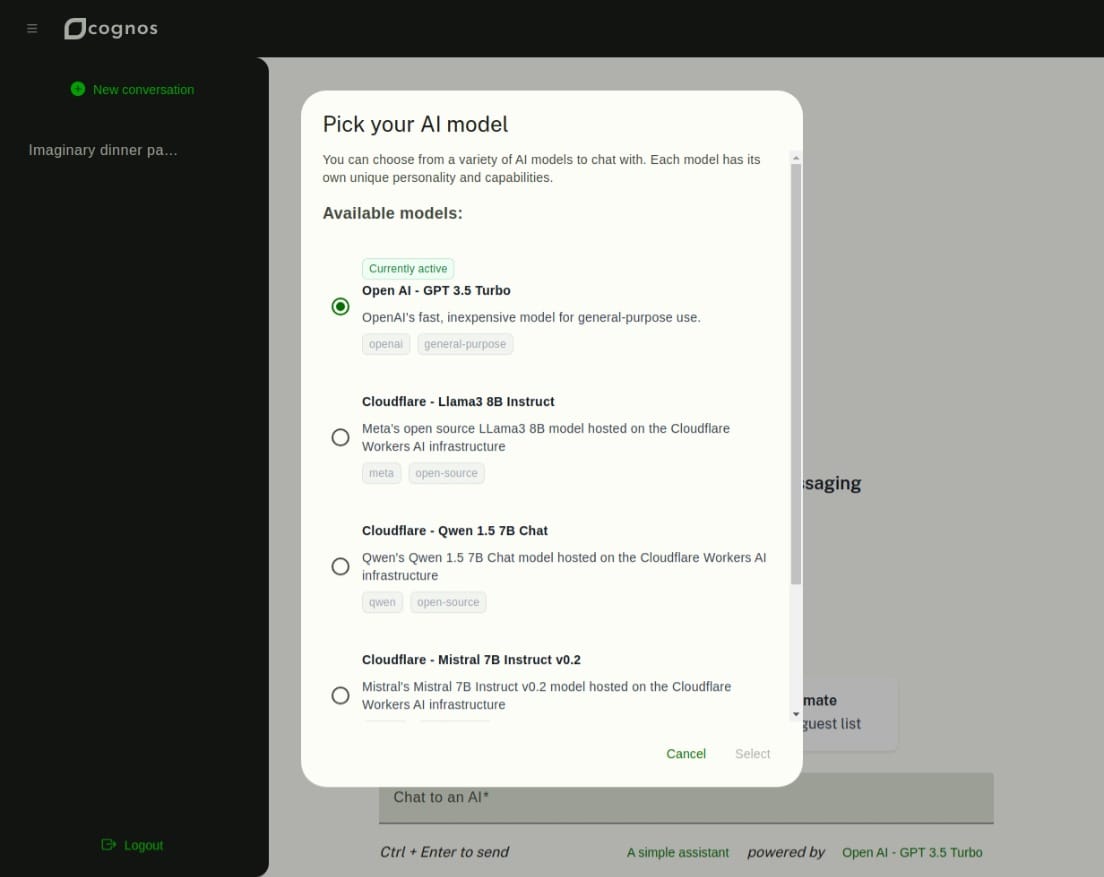

Screenshots of Cognos encrypted AI chats. Using multiple models in a single conversation.

Feature list

The basic product is now live with the following features (and lots more is planned).

Message encryption by default

Our core value is encrypting your chat messages, ensuring your conversations are private by default.

Separate conversations

Separate your chats into individual conversations, each encrypted with its own unique key pair.

Use different AI models in the same conversation

When using products such as Gemini or ChatGPT you are limited to models from Google and OpenAI respectively. If you want to use both you may need to sign up to both products.

Cognos allows you to choose which AI model you wish to use on a per-message basis. This means you can start your conversation with a general AI but switch to using a coding specialist at any point without having to start a new conversation.

Currently only a handful of lightweight models are active, but as we leave the Beta stage you will quickly have more AI options.
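As a rough illustration of choosing a model per message, the sketch below stores the chosen model on each message rather than on the conversation. The class names, field names and the model identifiers `general-model` and `coding-model` are made up for the example and are not Cognos's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str     # "user" or "assistant"
    content: str
    model: str    # the model picked for this specific message


@dataclass
class Conversation:
    title: str
    messages: list[Message] = field(default_factory=list)

    def ask(self, content: str, model: str) -> Message:
        """Record a user message together with the model chosen for it."""
        message = Message(role="user", content=content, model=model)
        self.messages.append(message)
        return message

    def models_used(self) -> list[str]:
        """Distinct models, in the order they first appear."""
        return list(dict.fromkeys(m.model for m in self.messages))


# One conversation, two different (hypothetical) models.
chat = Conversation(title="Trip planner")
chat.ask("Suggest a weekend itinerary for Luzern.", model="general-model")
chat.ask("Now write a script that exports it to CSV.", model="coding-model")
```

Because the model lives on the message, switching models mid-conversation needs no new conversation and no new key pair.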

Can we get feature X?

We have big plans but we want to hear from you to help us prioritize what to build. Send Ewan an email detailing your feature idea and use case so we can better understand and notify you if we add it.

Security

Cognos can't claim to secure your messages without explaining the security involved and demonstrating that there is zero unauthorized access to your messages.

The simple version

It's important to note that this is not end-to-end encrypted (as WhatsApp or Signal are), because the AI models you choose to interact with - currently - need to receive your messages in plain text.

Instead, a layer of encryption is added on top, like ProtonMail/Tuta/Skiff do for email. If you're familiar with such services, they take unencrypted emails addressed to you and encrypt them so only you can read them. When you send an email to a non-ProtonMail account, this is also sent unencrypted.

Cognos does something similar. The messages you send to our servers and to the AI are in plain text, although the connections between you, us and the AI provider are secured with HTTPS to prevent eavesdropping. After that they are encrypted, so you are the only person who decides who can access your messages.

The technical version

You start by creating a user account on our external identity provider (IDP) Ory, who are ISO 27001 and SOC 2 Type II certified.

When you first create an account you are asked for a "Vault Password". This never leaves your device and is used to symmetrically encrypt and decrypt your secret key. To turn it into a key of the right length and make brute-force guessing expensive, we first hash your Vault Password with Argon2id and the parameters recommended by OWASP. This turns your Vault Password into an alphanumeric, fixed-length string that is used as a NaCl Secretbox key. To combat rainbow table attacks, your user UUID (generated by Ory) is used as the salt.
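To make the shape of this derivation concrete, here is a minimal sketch in Python. It is not the Cognos implementation: Python's standard library has no Argon2id, so `hashlib.scrypt` stands in for it here, the cost settings are illustrative rather than OWASP's Argon2id recommendations, the sketch returns raw bytes rather than a string, and the UUIDs are made-up placeholders for the identifiers Ory would generate.

```python
import hashlib
import uuid

KEY_BYTES = 32  # NaCl Secretbox keys are 32 bytes long


def derive_vault_key(vault_password: str, user_uuid: str) -> bytes:
    """Stretch the Vault Password into a 32-byte key, salted with the user UUID."""
    salt = uuid.UUID(user_uuid).bytes  # 16 bytes, unique per user
    return hashlib.scrypt(
        vault_password.encode("utf-8"),
        salt=salt,
        n=2**14,  # stand-in cost settings, NOT the OWASP Argon2id parameters
        r=8,
        p=1,
        dklen=KEY_BYTES,
    )


# Hypothetical UUIDs standing in for the ones generated by the identity provider.
alice = "123e4567-e89b-12d3-a456-426614174000"
bob = "123e4567-e89b-12d3-a456-426614174001"

key_alice = derive_vault_key("correct horse battery staple", alice)
key_bob = derive_vault_key("correct horse battery staple", bob)
```

The same password gives different keys for the two users because the salt differs, which is what defeats precomputed tables. The derivation is deterministic for a given user, so any of your devices can rebuild the key from the Vault Password alone.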

After hashing your Vault Password, a Curve25519 public/secret key pair is generated on your device. The key pair needs to be stored on the server so it can be shared between your devices, so your hashed Vault Password is used to encrypt the secret key using a NaCl Secretbox (the XSalsa20 cipher combined with Poly1305 message authentication). Your plaintext public key and encrypted secret key are then sent to and stored on the server for future use.

When you create a conversation, another unique Curve25519 public/secret key pair is generated for that conversation. The conversation public key is also sent to the server in plaintext. This time, however, a shared key is generated from the conversation public key and your secret key. This shared key is used with a NaCl Box to encrypt the conversation secret key with public-key cryptography. While not supported at this time, in the future this will allow you to invite other users to a shared, encrypted conversation. Each user will have access to the conversation secret key, which is encrypted with their personal secret key.
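One way to picture what ends up on the server after these steps is as two kinds of key record, sketched below. The record and field names are invented for illustration, and the sketch assumes the 24-byte nonce is stored alongside each ciphertext. The sizes themselves follow from NaCl: 32-byte Curve25519 keys, a 24-byte XSalsa20 nonce and a 16-byte Poly1305 tag.

```python
from dataclasses import dataclass

KEY_BYTES = 32    # Curve25519 public or secret key
NONCE_BYTES = 24  # XSalsa20 nonce used by NaCl Secretbox and Box
MAC_BYTES = 16    # Poly1305 authentication tag


def sealed_size(plaintext_len: int) -> int:
    """Bytes stored for one encrypted value: nonce + tag + ciphertext."""
    return NONCE_BYTES + MAC_BYTES + plaintext_len


@dataclass(frozen=True)
class UserKeyRecord:
    user_id: str
    public_key: bytes            # stored in plaintext
    encrypted_secret_key: bytes  # Secretbox output, keyed by the hashed Vault Password


@dataclass(frozen=True)
class ConversationKeyRecord:
    conversation_id: str
    public_key: bytes            # stored in plaintext
    encrypted_secret_key: bytes  # Box output, keyed by the user/conversation shared key


def looks_well_formed(record) -> bool:
    """Check that a record holds a raw public key and an encrypted secret key."""
    return (
        len(record.public_key) == KEY_BYTES
        and len(record.encrypted_secret_key) == sealed_size(KEY_BYTES)
    )
```

Neither record contains a usable secret key: without the Vault Password, the server only holds public keys and ciphertext.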

Sending a message involves transmitting the message to the server in plaintext over a TLS encrypted connection. Your plaintext message is encrypted with the conversation public key and saved before being forwarded to your AI model of choice, again in plaintext over a TLS encrypted connection. When the AI model has generated a response, this is returned to our server. This response is also encrypted with the conversation public key and saved before being forwarded back to you.

No plain text copies of your messages or the AI responses are stored or logged.
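The order of operations described above can be sketched as a single function. This is only an outline under stated assumptions: `encrypt`, `store` and `ask_model` are hypothetical callables standing in for encryption to the conversation public key, the database write and the call to the AI provider, and are not Cognos's real interfaces.

```python
from typing import Callable

Encrypt = Callable[[bytes], bytes]  # encrypts to the conversation public key
Store = Callable[[bytes], None]     # persists ciphertext only
AskModel = Callable[[str], str]     # sends plaintext to the AI provider over TLS


def handle_message(
    plaintext: str, encrypt: Encrypt, store: Store, ask_model: AskModel
) -> str:
    """Save only ciphertext, while the model still receives plaintext."""
    store(encrypt(plaintext.encode("utf-8")))  # 1. encrypt and save the user message
    reply = ask_model(plaintext)               # 2. forward plaintext to the chosen model
    store(encrypt(reply.encode("utf-8")))      # 3. encrypt and save the response
    return reply                               # 4. hand the response back to the user
```

Note that `store` is only ever called with the output of `encrypt`, which is the property the "no plain text copies" statement relies on.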

Using this approach, when you next sign in to access your messages we:

- Hash your Vault Password with Argon2id salted with your user UUID;

- Retrieve your Curve25519 public and encrypted secret key pair;

- Decrypt your secret key using your hashed Vault Password;

- Retrieve the Curve25519 public and encrypted secret key pair for the conversation you are trying to access;

- Use your secret key and the conversation public key to decrypt the conversation secret key;

- Retrieve the XSalsa20Poly1305 encrypted messages for the conversation;

- Use the decrypted conversation secret key to decrypt all messages;

Your messages are only readable by you and - in the future - the people you choose to share your conversation with. Cognos can't read your messages or use them to train any AI. In the event of a data leak nobody can decrypt your messages. Hackers cannot decrypt your messages (although there is a risk to you if you are using an insecure Vault Password).

AI Model list

You can currently choose from the following providers and models:

- OpenAI:

- GPT-3.5-Turbo

- Cloudflare:

- Meta/LLama3-8B-instruct

- Mistral/Mistral-7B-instruct

- Deepseek-AI/Deepseek-math-7B-instruct

- Qwen/Qwen1.5-7B-Chat

We are working to add and release these during the Beta:

- Google:

- Gemini-1.5-Flash

- Anthropic:

- Claude3-Haiku

When we go live there will also be additional, larger models, and others will be continuously added to our offering based on user demand and new releases from providers.

Privacy policies of AI providers

You may have concerns about sending your data to our upstream AI providers and that is understandable. In the mid-to-long term we want to have our own hardware to run models on Cognos-owned infrastructure, but this is expensive and not possible from the beginning.

For those who don't know, using the API of services like OpenAI - as Cognos does - often gives you immediate privacy benefits versus using their products like ChatGPT, as they will not use your data for training. Unfortunately, however:

[...] data submitted to OpenAI’s services through automated content classifiers and safety tools, including to better understand how our services are used. The classifications created are metadata about the business data but do not contain any of the business data itself. Business data is only subject to human review as described below on a service-by-service basis.

You have the choice about where to draw the line when it comes to your data. Perhaps you are happy to have some messages sent to OpenAI or Google. Perhaps you prefer to err on the side of caution and use only open source models hosted on Cloudflare, Lightning AI or similar. It's up to you and we'll give you as many options as possible.

A little about me and why I'm doing this

I'm Ewan, a Scottish software engineer living in Luzern, Switzerland, and I'm currently working full-time building projects to have an impact.

Cognos was built to scratch my own itch of wanting to use generative AI in a more privacy-friendly way. While I have the skills and knowledge to build my own AI setup, I have decided not to spend the time or the money. It's also not a valid option for my non-technical friends or family.

This is my attempt to bring confidentiality to AI chats and it is currently being used by me and my family. I have no interest in what any of my users are talking to AI about.

If you want to talk to me about Cognos I'm happy to hear from you via:

- Email: ewan@cognos.io

- Threema: NM4AVD9N

Frequently asked questions (FAQ)

Is this end-to-end encrypted?

No. It is important to note that this service does require you to trust us and the AI service providers as your messages have to be sent to the AI in plain text.

Like services such as ProtonMail, we encrypt as soon as we can to maximize your privacy.

I've found a bug or security issue, what do I do?

Please contact Ewan - the founder - directly to responsibly disclose any bugs or security vulnerabilities. We are at an early stage at the moment, but we will work together with you to fix the issue quickly and try to find a suitable way to reward you for your work.

Thanks in advance.

How do I contact you?

ewan@cognos.io - that's the email address of the founder and person who built Cognos. He should be able to help you.

What about the privacy of my messages with the AI providers?

At this time we rely on external providers such as OpenAI, Google and Cloudflare, amongst others, to host the AI models. This may seem strange, but the APIs are under different privacy policies than their products. ChatGPT data will be used for training, whereas data sent through the API (which is what we use) will not. Wherever possible we will also push for zero data retention with upstream providers.

We give you the choice of which model you wish to use so - for example - if you don't wish to send any information to Google you don't have to use their AI.

In the future we also plan to run our own hardware so we can host models and offer as much privacy and security as we can.

How do you handle moderation, abuse and user safety?

By default we try to avoid any additional content parsing that may weaken user privacy. However, as a consumer of external providers, we must also conform to their guidelines to avoid abuse. This is also to avoid our API keys being blocked. For example, content sent to the OpenAI API will first be examined by the OpenAI moderation API to check if the text is potentially harmful.

We are working to additionally highlight this so that users can make an informed decision when choosing a model.

Additionally we want to provide users the ability to "report" AI generated content to us for review. This is opt-in but will involve allowing Cognos to decrypt the conversation in question. Without this, we are unable to see any content whether sent by you or generated by an AI.

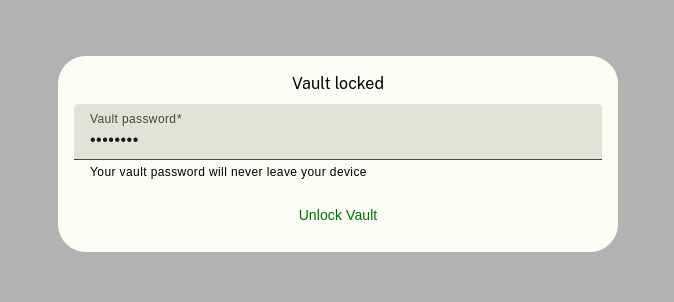

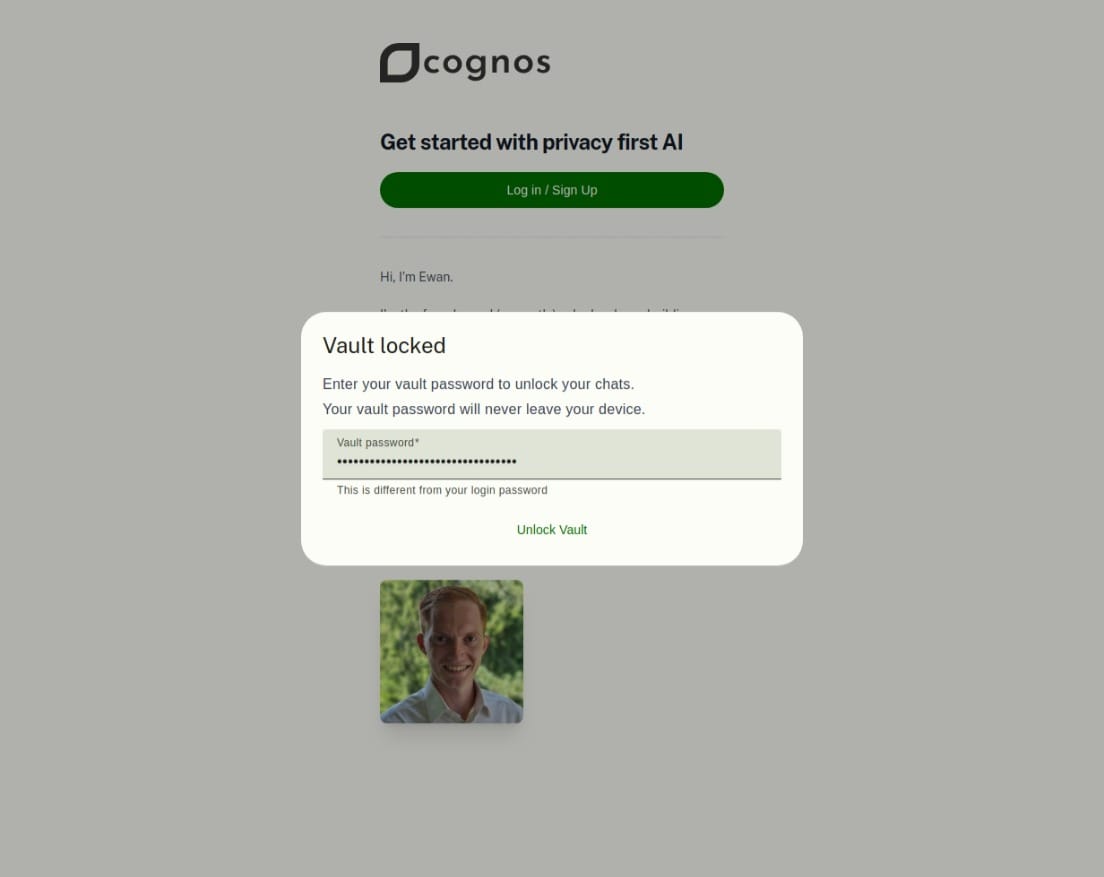

Why do I need two passwords? That's just annoying

Having a second password does add some extra friction - sorry about that.

Our setup is designed to bring you extra security. The first password is the one you use to sign in, and it goes to the trusted identity provider. Combined with your username, this proves who you are.

The second password, your Vault Password, never leaves your device and is used to encrypt and decrypt your private messages.

By having separate passwords, one of which stays on your device, we ensure that your Vault Password is never sent to us or to anyone else.

Why is this free? Aren't you using my data if the product is free?

For other products that is the case. In this situation, however, we are still testing an imperfect product, so Ewan - the founder - is personally covering the costs for now.

Your data cannot be used or sold, now or in the future. That's the point of this service 😎.

How much will this cost?

The Basic plan will be priced at $8/month and get you access to fast, lightweight models like GPT-3.5-Turbo and LLama3 8B.

We're aiming to be competitive with services such as ChatGPT and Gemini so the Premium tier (access to more complex, expensive models like GPT-4) will be $25/month.

Other than the differences in models, the plans will have the same features and offer the same level of high security and privacy.

Will you offer family/business/team plans?

Yes, we are working on collaborative features and will make these available for users under a "Team" plan.

Team plans will require a minimum of 4 users and be priced at $5/user/month for the Basic plan and $17/user/month for the Premium tier.

ChatGPT and Gemini are free, why would I pay for this?

Because you don't want your AI chats leaked, sold or analyzed. We can guarantee this whereas these "free" services make their money from doing so.

Can I invest or support you financially?

That's very kind of you. At this time we're not raising investment but please show your interest via email so we can let you know if this changes.

You can also sign up for a paid subscription on this site to pre-purchase and help support us financially.

Can I use this for work?

You probably have to check your company policy, but from our side - yes, go ahead!

If you are looking for more business functionality such as teams and user management please contact us with your requirements as we have some of these features in the pipeline.

I lost my Vault Password, can you help me recover it?

Unfortunately no, we can't.

Due to how our system is built we cannot access your messages or recover your Vault Password. Please keep this in a safe place and don't lose it.

Can you see/leak/sell my data?

No. We get your data in plain text but it's immediately encrypted.

Once encrypted, you are the only one who can get access to it. We can't see it and we can't sell it. Any leaks will not matter, as people who don't have your key won't be able to decrypt it.

Can I use images/files with this?

Currently no. We are releasing with a proof-of-concept of text generation, but in the future we plan to expand into images and other content with models such as GPT-4o and Gemini Pro.

Is this encryption post-quantum resistant?

No, our current proof-of-concept setup is secure today but not post-quantum resistant.

We are aware of this and if Cognos becomes popular we will take steps to add this additional layer of security.

Do you offer an API? Better yet, an OpenAI-compatible API?

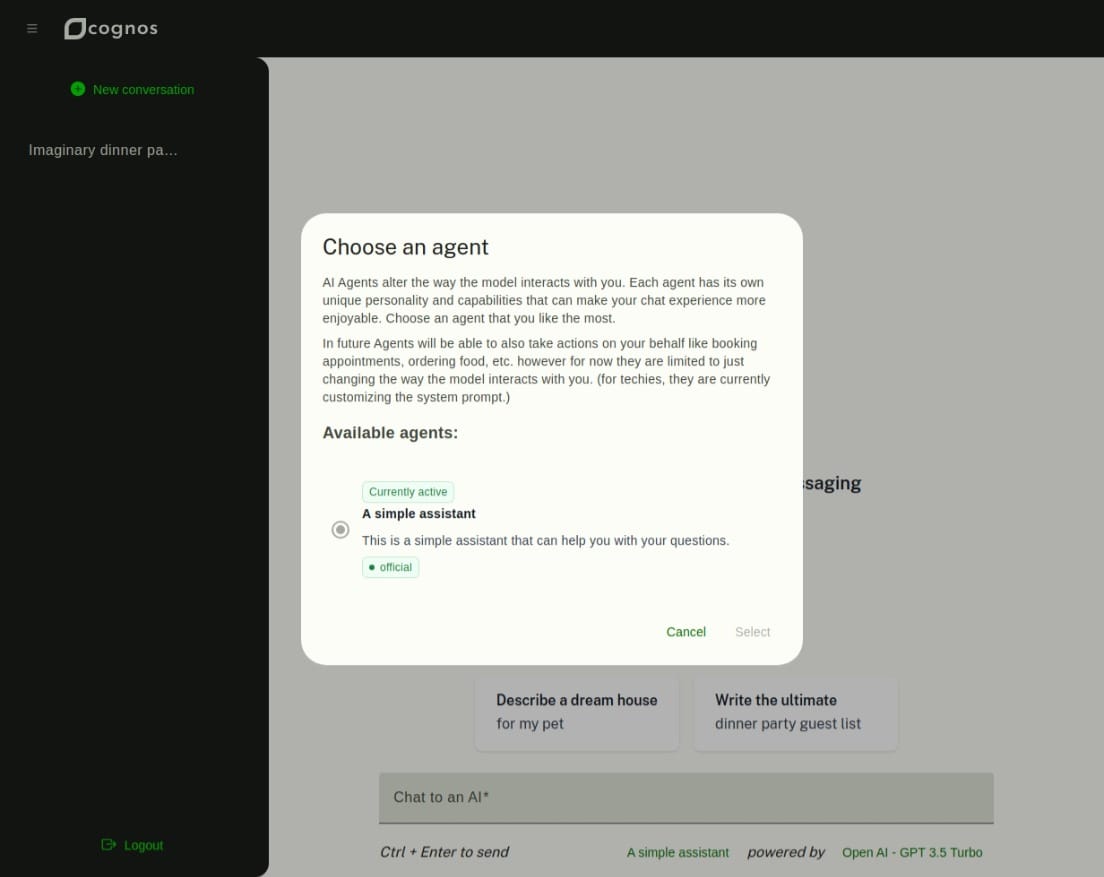

Actually, our API is already exposed as an OpenAI-compatible API, augmented with some additional metadata to assign your message to a conversation and attach an Agent.

This will not work with off-the-shelf OpenAI API tools yet, but it is our mid-term plan to make this as easy as possible. Our idea is to let you use Cognos models, Agents and encryption with your own front-end tool.
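To give a feel for what "OpenAI-compatible plus metadata" could look like, the sketch below builds a request body in the usual chat-completions shape (`model` and `messages`) and adds an extra block. The `metadata`, `conversation_id` and `agent_id` names are guesses made up for this example. The real Cognos field names are not documented here and may differ.

```python
import json
from typing import Optional


def build_chat_request(
    model: str,
    messages: list[dict],
    conversation_id: str,
    agent_id: Optional[str] = None,
) -> str:
    """Serialize a chat request with a hypothetical Cognos metadata block."""
    # Standard OpenAI-style chat fields.
    payload: dict = {"model": model, "messages": messages}

    # Hypothetical extension: ties the message to a conversation and an Agent.
    metadata = {"conversation_id": conversation_id}
    if agent_id is not None:
        metadata["agent_id"] = agent_id
    payload["metadata"] = metadata

    return json.dumps(payload)


body = build_chat_request(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello"}],
    conversation_id="conv-123",  # placeholder identifier
    agent_id="agent-7",          # placeholder identifier
)
```

An off-the-shelf OpenAI client would send the first two fields but not the extra block, which is one plausible reason such tools don't work unmodified yet.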